This topic was presented at DEF CON 30, Adversary Village (the deck is available here).

TL;DR

Python provides some key properties that effectively create a blindspot for EDR detection, namely:

- Python’s wide usage means that the Python interpreter has a highly varied telemetry baseline, since the native APIs it calls depend on the Python code being run. This can make it harder for EDR vendors to spot anomalies coming from python.exe or pythonw.exe.

- Python lacks transparency (see PEP-578) into dynamic code executed by the stock python.exe and pythonw.exe binaries.

- The Python Software Foundation officially provides a “Windows embeddable package” that can be used to run Python in a minimal environment without installation. The package comes with signed binaries.

An attacker could leverage the official Python Windows embeddable zip package by dropping it on disk and using the signed python.exe (or pythonw.exe) binary to execute a wide range of post-exploitation tasks.

With this in mind, a tool named Pyramid has been developed to demonstrate that useful capabilities can be brought into python.exe and operated while successfully evading EDR detection. Pyramid can execute the following techniques straight from python.exe or pythonw.exe:

- dynamically importing and executing Python-BloodHound and secretsdump.

- executing BOFs (dumping lsass with nanodump).

- creating an SSH local port forward to tunnel a C2 agent.

The tool has been successfully tested against several EDRs, demonstrating that a blindspot is indeed present and that a range of capabilities can be executed from it. This technique has been dubbed Living-off-The-Blindspot.

Intro

EDRs are commonly encountered by red teamers during engagements, and it is vital to understand how to operate under their scrutiny without being detected.

In an effort to find a way around several EDRs, the bypass problem was analyzed by looking holistically at the defenses currently put in place by EDRs, in order to find a novel strategy that enables operating in blind spots rather than bypassing a single defense mechanism.

EDRs Defenses

EDRs deploy several defenses in order to detect and respond to threats. The common requirement for all the defenses is visibility, since you can’t protect what you can’t see. Visibility can be understood as the EDR’s capability to properly process information aimed at gaining context for a specific status/action/language/technique on a system or network. Information can come from OS sources (such as AMSI or ETW) or via proprietary techniques.

The following paragraphs provide some key concepts for each major Defense that must be taken into consideration when thinking about a bypass strategy. This post is not meant to be an extensive explanation of each defensive measure, since there are much better resources already available online (check here). Bear in mind that Defenses do not usually work in silos: information is shared among them to contribute to the detection of malicious activity.

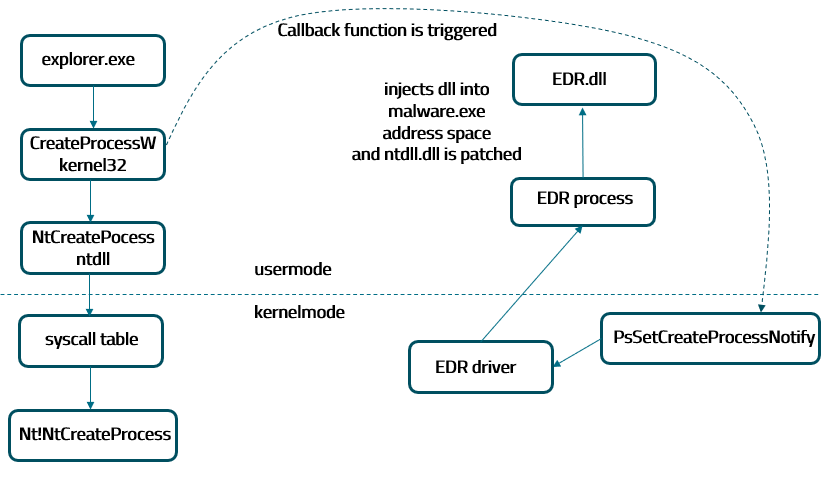

Kernel Callbacks and Usermode Hooking

Two common ways of increasing visibility for EDRs are Kernel Callbacks and Usermode Hooking.

Kernel Callbacks are commonly used to get information on processes and loaded images and to inject the EDR’s DLL into newly created processes (see the example in the image below). The PsSetCreateProcessNotifyRoutine routine registers a Kernel Callback so that when a specific action occurs (e.g. process creation), the driver receives a notification and executes its callback. In the example below, the kernel driver instructs the EDR process to inject the EDR’s DLL into the newly created process, laying the groundwork for usermode hooking.

Figure: Kernel Callback example

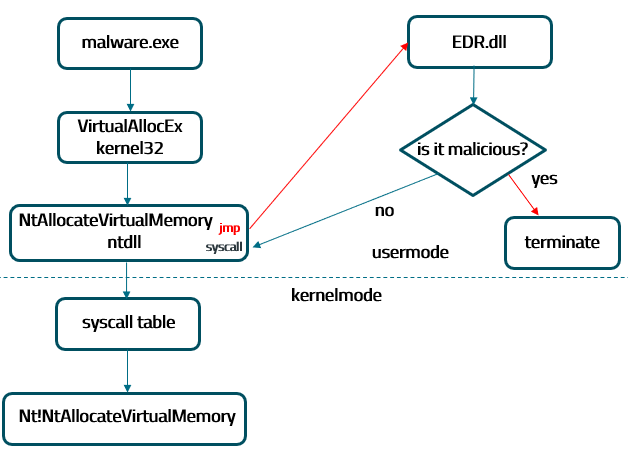

The EDR’s DLL is then mainly used to perform usermode hooking: it patches ntdll.dll to inspect specific Windows API calls made by the process and takes action if a call is deemed malicious.

Figure: Usermode Hooking example

Usermode hooking has at least two big limitations:

- EDRs do not hook every Windows API call, for performance reasons; instead, they hook the APIs most commonly abused by malware.

- Hooking is done in usermode, so any usermode program can theoretically undo it.

Memory Scanning

Memory scanning techniques look for patterns in the code and data of processes. From an EDR point of view they are resource intensive, so one of the most common approaches is to run periodic or triggered scans based on events, detections, or analyst actions.

From an attacker perspective, memory scans are dangerous because even a fileless payload has to be in cleartext in memory while it is executing its routines. Recently, the offensive security community came up with techniques (such as ShellcodeFluctuation and Sleep mask for Cobalt Strike) to mitigate the risk of detection in memory; these basically obfuscate the code in memory while a payload is “sleeping”, i.e. not executing tasks and waiting to fetch commands from the C2 after a certain time.

However, the risk remains while the payload is executing tasks: if a memory scan is triggered by malicious operations performed by the payload, it may well lead to a memory dump or to a match between the cleartext payload code and a set of known-bad signatures.
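The sketch below illustrates the pattern-matching step: a toy signature scan over a memory snapshot, in Python. The rule name, the hex signature syntax (with ?? as a single-byte wildcard) and the snapshot bytes are all made up for illustration. Real engines, such as YARA-based scanners, are far more capable. The sketch only shows why a payload sitting in cleartext is exposed: any stable byte sequence in it can become a rule.

```python
from __future__ import annotations

import re


def compile_signature(sig: str) -> re.Pattern[bytes]:
    """Turn a hex signature such as 'DE AD ?? EF' into a bytes regex ('??' = any byte)."""
    parts = [b"." if tok == "??" else re.escape(bytes.fromhex(tok)) for tok in sig.split()]
    return re.compile(b"".join(parts), re.DOTALL)  # DOTALL: '.' must also match 0x0A


def scan(snapshot: bytes, signatures: dict[str, str]) -> list[tuple[str, int]]:
    """Return (rule name, offset) for every signature match in a memory snapshot."""
    hits = []
    for name, sig in signatures.items():
        hits.extend((name, m.start()) for m in compile_signature(sig).finditer(snapshot))
    return hits


SIGNATURES = {"demo_rule": "DE AD ?? EF"}  # hypothetical rule
snapshot = b"\x00\x01\xde\xad\x42\xef\x02"
print(scan(snapshot, SIGNATURES))  # [('demo_rule', 2)]
```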

ML based detections

Machine Learning is an entire discipline and I won’t dare to cover it extensively, since I am no expert at all and there are many better resources elsewhere. However, we can focus on some key concepts employed in ML detections that can be very useful in defining a bypass strategy. Starting with the very basics, Machine Learning can detect malware variants that evade signature-based detection.

Characteristics peculiar to malware are translated into “features” and used to train Machine Learning models. Features can be static (identifiable without executing samples) or dynamic (extracted at runtime). Basically, to detect malware using Machine Learning, one can collect a large number of malware and goodware samples, extract the features, train the ML system to recognize malware, and then test the approach with real samples.

Features play an important role in the process because they are related to sample properties. Common features suggesting that a file is good are whether it is digitally signed or whether it has been seen on more than 100 workstations in the network. On the other hand, features suggesting that a file is bad could be the presence of malformed or encrypted data, or a suspicious series of API calls made by the binary (a dynamic feature).

The key concept here is that features have a “weight” in the decision process of an ML model (assigning weights to features is one of the purposes of ML training). In layman’s terms, features with a higher weight can bend the ML model’s decision toward malware or goodware more than lower-weight features. Security vendors publish neither the weights nor the features used by their ML models, but as attackers we can think of at least one feature that can help evade detection: the Digital Signature. In fact, malware developers and operators often try to sign their malware to evade security solutions, because this property is often used as a goodware feature by ML models, probably with a fairly high weight.
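As a rough illustration of feature weights, the toy model below scores a file with a logistic function over binary features. The feature names, weights and bias are invented for this sketch, since vendors do not publish theirs. The point is only that strongly weighted goodware features, such as a digital signature, can pull an otherwise suspicious sample below a 0.5 threshold.

```python
from __future__ import annotations

import math

# Invented weights: positive values push toward "malware", negative toward "goodware".
WEIGHTS = {
    "is_signed": -3.0,
    "seen_on_many_hosts": -2.0,
    "has_encrypted_data": 1.5,
    "suspicious_api_sequence": 2.0,
}
BIAS = 0.5


def malware_probability(features: dict[str, bool]) -> float:
    """Logistic score over the binary features that are present (toy linear model)."""
    z = BIAS + sum(w for name, w in WEIGHTS.items() if features.get(name, False))
    return 1.0 / (1.0 + math.exp(-z))


unsigned = {"has_encrypted_data": True, "suspicious_api_sequence": True}
signed = dict(unsigned, is_signed=True, seen_on_many_hosts=True)

print(f"{malware_probability(unsigned):.2f}")  # 0.98
print(f"{malware_probability(signed):.2f}")  # 0.27
```

With these made-up numbers, the same suspicious behaviour drops from about 0.98 to about 0.27 once the sample is signed and widely seen.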

Another dynamic feature that can be abused by properly choosing the binary under which to operate is the API call sequence. This works well for malware samples, but what about malicious code that gets executed in memory by an interpreter?

In that case, the API call sequence made by the interpreter binary can be virtually anything, because it depends on the code run by the interpreter. How are security vendors handling that? I don’t have exact answers, but we can test EDR behaviour and draw some conclusions.

IoCs and IoAs

One definition of IoC is “an object or activity that, observed on a network or on a device, indicates a high probability of unauthorized access to the system”. In other words, IoCs are signatures of known-bad properties or actions performed by malware. IoCs are useful for forensic intelligence after an attack has occurred, but they can also produce false positives, and their effectiveness is limited to techniques and malware currently known to defenders.

On the other hand, an Indicator of Attack (IoA) can be defined as an indicator stating that an attack is ongoing. The indicator results from correlating actions deemed malicious that were performed by an attacker with the systems/binaries involved. IoAs may not be as useful as IoCs for forensic purposes, but they can be much more useful in identifying an ongoing attack.
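The difference can be sketched in a few lines of Python. The IoC check is an exact hash lookup, so any change to the sample defeats it. The IoA check correlates an ordered chain of actions per process, whatever binary performs them. The event type names, the example chain and the known-bad hash set are hypothetical and do not reflect any vendor's rule format.

```python
from __future__ import annotations

import fnmatch
import hashlib
from dataclasses import dataclass

# IoC: a signature of something already known to be bad (here, a file hash).
KNOWN_BAD_SHA256 = {hashlib.sha256(b"known-bad sample").hexdigest()}


def ioc_match(file_bytes: bytes) -> bool:
    return hashlib.sha256(file_bytes).hexdigest() in KNOWN_BAD_SHA256


@dataclass
class Event:
    pid: int
    action: str  # hypothetical event type names
    target: str


# IoA: an ordered chain of (action, target pattern) steps that together suggest
# an attack in progress, whatever binary performs them.
IOA_CHAIN = [("open_process", "lsass.exe"), ("write_file", "*.dmp")]


def ioa_match(events: list[Event]) -> set[int]:
    """Return the PIDs whose events contain IOA_CHAIN as an ordered subsequence."""
    progress: dict[int, int] = {}
    for ev in events:
        step = progress.get(ev.pid, 0)
        if step == len(IOA_CHAIN):
            continue  # chain already completed for this PID
        action, pattern = IOA_CHAIN[step]
        if ev.action == action and fnmatch.fnmatchcase(ev.target.lower(), pattern):
            progress[ev.pid] = step + 1
    return {pid for pid, step in progress.items() if step == len(IOA_CHAIN)}


events = [
    Event(100, "open_process", "lsass.exe"),
    Event(200, "write_file", "report.docx"),
    Event(100, "write_file", r"C:\Temp\out.dmp"),
]
print(ioc_match(b"known-bad sample"), ioc_match(b"slightly modified sample"))  # True False
print(ioa_match(events))  # {100}
```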

Bypass Strategy

Knowing some key concepts, although very basic, about the common Defenses put in place by EDRs can help shape a bypass strategy. Abstracting away the technical details and digesting the information with an offensive mindset, we could summarize the previously listed Defenses in the following statements:

- Usermode Hooking is applied only to certain APIs and can be circumvented from usermode.

- Kernel Callbacks cannot be circumvented from usermode, but they are mainly used to provide visibility on newly created processes and loaded images, and to trigger the EDR’s DLL injection into newly created processes.

- Executing C2 payloads will increase the risk for detection by memory scans and may trigger IoCs.

- ML-based detections can assign a bad score to unknown and unsigned binaries, and a better score to signed and widely used binaries.

- IoAs can detect a malicious action by analyzing anomalies of the steps taken in executing that action.

Each of the statements is an approximation and does not fully represent the characteristics of a single Defense, but still provides useful information on key properties that can be exploited for a bypass. I hate analogies when it comes to IT topics, but the statements can be seen as the gates of a ski track (the bypass) that does not exist yet. We just have to draw one possible track, keeping the gates as boundaries.

Main Categories of EDR Evasion operations

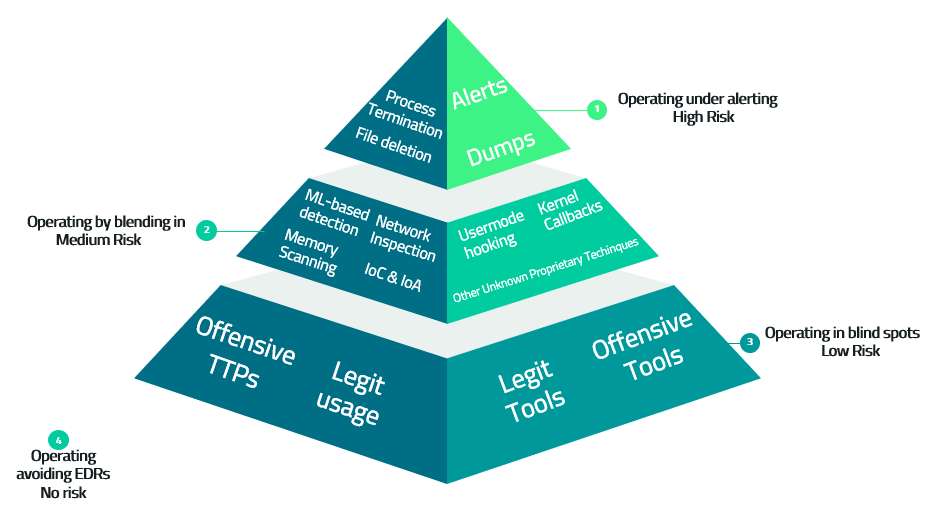

When it comes to evading an EDR, there are four main categories of operations:

- Avoiding the EDR - this can be accomplished by operating from VPN, proxying traffic, or compromising only targets not equipped with EDRs.

- Blending into the environment - executing operations by abusing tools and actions commonly observed in the target network (e.g. administrative RDP sessions, usage of legit administrative tools, Teams abuse).

- EDR tampering - this category involves disabling or limiting EDR’s features or visibility in order to perform tasks without triggering an EDR response or without sending alerts to the central repository. For more details please check this awesome blogpost: “How To Tamper the EDR” by my friend Daniel Feichter @VirtualallocEx

- Operating in blind spots - EDRs have finite resources and finite visibility, so blind spots are always present. Operating in blind spots is powerful since it carries the least risk of being detected.

Each category can be related to a corresponding risk level for that type of operation. I depicted the risk brought by each type of operation in a Pyramid of Pain (Attacker’s Version), where the layers of the Pyramid are ordered bottom-up by the amount of risk introduced by the operation type.

Figure: Attacker’s Pyramid of Pain - Mapping risk levels to EDR Evasion category

Avoiding EDRs for the whole operation is not always viable, especially for multi-month engagements. Ideally, an attacker would want to operate in the bottom layer of the Pyramid to minimize the risk of being detected by EDRs; however, this type of operation must be backed by techniques and capabilities that usually require some amount of research to identify and exploit blindspots. As attackers, we decided to follow this route, and the following paragraphs outline the strategy employed.

Operational constraints

We should now define some constraints and limitations under which we want to operate. The EDR avoidance category is basically ruled out, because we want to focus on finding and exploiting EDRs’ blind spots and also because avoiding EDRs at every stage of an operation is not always feasible. For that reason we want to:

- operate directly on an EDR-equipped box without proxying traffic or avoiding engagement with EDRs.

- be able to operate mainly agentless in order to keep memory indicators low and perform common post-exploitation tasks without needing a C2 agent running.

- avoid remote process injection and dropping malicious artifacts on disk, for the very same reason of keeping memory indicators low.

- keep C2 agent execution capability as a last-resort since in some cases we’ll have to accept the tradeoff risk to get extended C2 features available.

To operate in such a scenario we would need some capabilities in our tooling, such as:

- Dynamic module loading

- Compatibility with community-driven tools

- Traffic tunneling without spawning new processes

Choosing a language

Operations require capabilities that in turn are coded in a programming language. So it makes sense to start by choosing a programming language that could help both in finding blind spots AND in accelerating capability development.

The programming language that would better fit the scenario in which we’ll be operating should have the following requirements:

- the programming language of choice should be a non-native language (to avoid using custom compiled malicious artifacts) and provide a signed interpreter to execute code.

- it must be possible to execute code without installing tools on the target machine.

- existing public tooling in that same language could be imported.

- additional capabilities could be developed without much hassle.

- it should expose as few optics as possible to EDRs.

The candidate languages were F#, JavaScript, C# and Python. However, after excluding languages with optics integrated into the OS (such as AMSI for C# and F#) or with little public offensive tooling available, Python seemed the most promising candidate. As a matter of fact, Python can satisfy the above requirements since:

- Python is an interpreted language and officially comes with a signed interpreter. It’s not tightly integrated with OS optics, since Python uses native system APIs directly and existing monitoring tools either suffer from limited context or are subject to auditing bypasses. PEP-578 aims to solve this issue, since there was no native way of monitoring what happens during a Python script execution. However, as we’ll see later, the issue is not solved yet.

- Python.org distributes Windows embeddable zip packages containing a minimal Python environment that does not require installation.

- There is a huge amount of public tooling written in Python that can be imported and used.

- Python can provide access to Windows APIs via ctypes, and shellcode can be injected into the Python process itself using Python, theoretically allowing the execution of arbitrary code or the development of any capability in Python (C# assemblies could also be run using Donut).

The properties listed above indicate a candidate blindspot within which we can build capabilities and test their effectiveness against EDRs. The fact that there currently isn’t an out-of-the-box way to inspect dynamic Python code execution opens up a very interesting avenue for attackers.
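For reference, PEP-578 did add runtime audit hooks in Python 3.8. The interpreter raises events such as compile and exec, and sys.addaudithook() registers a callback for them. The sketch below shows these events firing for dynamically compiled code. The catch, consistent with the issue not being solved yet, is that the hook must be registered by code running inside the same interpreter (or by a custom entry point embedding CPython). To my knowledge, the stock python.exe does not forward these events to an external consumer such as an EDR.

```python
from __future__ import annotations

import sys

observed: list[str] = []


def audit_hook(event: str, args: tuple) -> None:
    # Record only events tied to dynamic code. Hooks cannot be removed once
    # added, and an exception raised here would propagate to the audited code.
    if event in ("compile", "exec"):
        observed.append(event)


sys.addaudithook(audit_hook)  # Python 3.8+

# Dynamically built source: the kind of code whose execution PEP-578 wants to expose.
namespace: dict = {}
exec(compile("result = sum(range(10))", "<dynamic>", "exec"), namespace)

print(namespace["result"])  # 45
print(observed)  # includes both "compile" and "exec"
```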

Furthermore, Python is widely used and its (signed) interpreter directly executes Windows API calls depending on the Python code being run. This implies an enormous variety of telemetry and API calls coming from the very same binary (python.exe or pythonw.exe), which brings precious extra points when it comes to operating undetected by EDRs. In fact, it will likely be difficult for EDR vendors to spot anomalies (and build detections) coming from python.exe when its baseline telemetry is so varied.

All things considered, Python provides some unique opportunities that can be exploited to operate in EDRs’ blindspot.

Leveraging Python

To help operate within the blindspots provided by Python, I wrote a tool named Pyramid (available on my github). The tool aims to leverage Python to operate in the blindspots identified above, currently using four main techniques:

- Execution Method - Dropping and running python.exe from “Windows Embeddable Zip Package”.

- Dynamic in-memory loading and execution of Python code.

- Beacon Object Files execution via shellcode.

- In-process C2 Agent injection.

Execution Method

The execution method for our techniques should create as few suspicious indicators as possible that could trigger an anomaly or a detection. Thinking about the Defenses, one could trick ML detections by using the signed Python interpreter, and IoAs by avoiding uncommon process tree patterns.

So the simplest way to achieve this would be to drop the Windows embeddable zip package in a user folder or share and launch python.exe (or pythonw.exe) directly, without spawning it from C2 agents or unknown binaries. This action would mimic a common execution of Python and would likely not be flagged as malicious by EDRs.

Dynamic in-memory import

The technique of dynamically importing Python modules in memory has been around for quite some time, and some great previous work has been done by xorrior with Empyre, scythe_io with in-memory Embedding of CPython, and ajpc500 with Medusa.

At the core of dynamic import is PEP-302 “New Import Hooks”, which describes how to modify the logic by which Python modules are located and loaded. Python normally imports a module from the path on disk where it is located. However, we want to import modules from memory, not from disk.

Import hooks allow you to modify the logic by which Python modules are located and loaded; this involves defining a custom “Finder” class and adding finder objects to sys.meta_path, which holds entries that implement Python’s default import semantics (you can view an example here).

So basically to use PEP-302 and be able to import modules in-memory one should:

- Use a custom Finder class. Pyramid’s finder class is based on Empyre’s.

- Download a Python package as a zip, in memory.

- Add the zip file finder object to sys.meta_path.

- Import the zip file in memory.
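The steps above rely on the standard importlib machinery. Below is a minimal sketch of a combined finder and loader placed on sys.meta_path that serves modules from memory. It is not Pyramid's or Empyre's finder. To stay self-contained, the sources come from a literal dict (the hypothetical greeting module) rather than from a zip downloaded into memory. Packages, which would additionally need submodule_search_locations, are not handled.

```python
from __future__ import annotations

import importlib.abc
import importlib.util
import sys


class InMemoryFinder(importlib.abc.MetaPathFinder, importlib.abc.Loader):
    """Finder + loader serving flat (non-package) modules from a dict of sources."""

    def __init__(self, sources: dict[str, str]) -> None:
        self.sources = sources

    def find_spec(self, fullname, path=None, target=None):
        # Returning None lets the next finder on sys.meta_path try the name.
        if fullname not in self.sources:
            return None
        return importlib.util.spec_from_loader(fullname, self, origin="<memory>")

    def create_module(self, spec):
        return None  # fall back to the default module object

    def exec_module(self, module):
        name = module.__spec__.name
        code = compile(self.sources[name], f"<memory:{name}>", "exec")
        exec(code, module.__dict__)


# Hypothetical module source; in the flow above it would come from an in-memory zip.
finder = InMemoryFinder({"greeting": "def hello(name):\n    return f'hello {name}'\n"})
sys.meta_path.insert(0, finder)  # consulted before the default path-based finders

import greeting  # resolved by InMemoryFinder, no file is read for this module

print(greeting.hello("world"))  # hello world
```

Because the finder is inserted at position 0, it is consulted first, and returning None from find_spec hands every other module name back to the default finders.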

There are some limitations, though. First, PEP-302 does not support importing Python extensions (*.pyd files) from memory; second, in-memory importing a package with a lot of dependencies brings conflicts between them (dependency nightmare) that need to be sorted out.

The first problem is the most complex one, since in-memory importing *.pyd files requires the CPython interpreter to be re-engineered and recompiled (that’s what scythe_io did), hence losing the precious digital signature. We can avoid losing the Python interpreter’s digital signature by dropping on disk only the *.pyd files needed by the Python dependency that we want to import in memory.

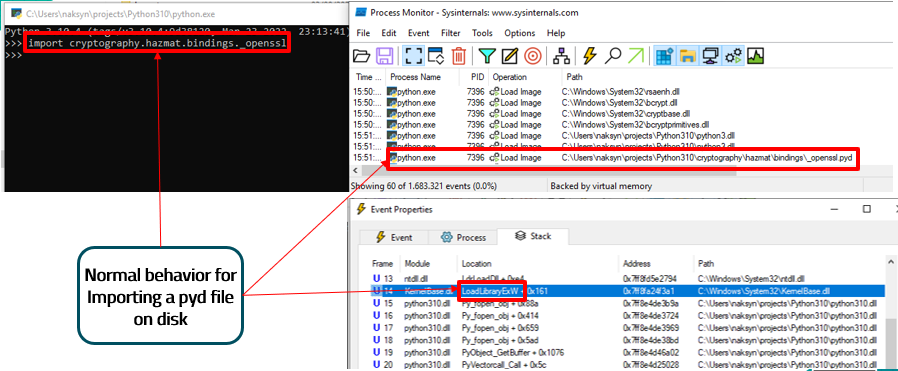

In fact, looking at how Python normally imports *.pyd files (which are essentially DLLs), we can see that under the hood they are loaded with the Windows API LoadLibraryEx using their path on disk. We can accept a tradeoff: import pyd files by dropping them on disk, and continue importing in memory all the other modules that do not require *.pyd files. This allows us to keep the interpreter’s digital signature while using Python’s normal behaviour for loading extensions.
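This can also be observed from the Python side by inspecting module specs. importlib represents compiled extensions with importlib.machinery.ExtensionFileLoader, which is constructed from a module name and a filesystem path. Pure-Python modules, by contrast, can be served by any loader, including an in-memory one. The sketch below only inspects specs and does not load any extension. _ctypes is just an example of a module that is usually a compiled extension in CPython builds, and the output differs across platforms and builds.

```python
from __future__ import annotations

import importlib.machinery
import importlib.util


def loader_info(name: str) -> tuple[str, str | None]:
    """Return (loader name, origin) for a top-level module without executing it."""
    spec = importlib.util.find_spec(name)
    if spec is None:
        raise ModuleNotFoundError(name)
    loader = spec.loader
    # Built-in and frozen modules use the importer class itself as their loader.
    loader_name = loader.__name__ if isinstance(loader, type) else type(loader).__name__
    return loader_name, spec.origin


def is_file_extension(name: str) -> bool:
    """True if the module would be loaded from a compiled .pyd/.so file on disk."""
    spec = importlib.util.find_spec(name)
    return spec is not None and isinstance(spec.loader, importlib.machinery.ExtensionFileLoader)


# Extension suffixes for this interpreter (".pyd" variants on Windows, ".so" on Linux).
print(importlib.machinery.EXTENSION_SUFFIXES)

for mod in ("json", "sys", "_ctypes"):
    try:
        print(mod, loader_info(mod), is_file_extension(mod))
    except ModuleNotFoundError:
        print(mod, "not available in this build")
```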

Figure: Normal Python behaviour for loading pyd files

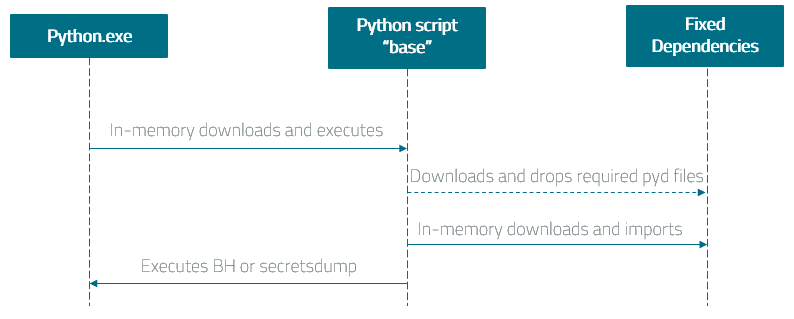

The second problem has been solved by manually addressing every dependency issue while importing the packages python-bloodhound, paramiko and impacket secretsdump, and by providing the fixed dependencies in Pyramid for use with a frozen version of the target packages. The technique execution flow is depicted in the following scheme:

Figure: Dynamically importing and executing BloodHound-Python/secretsdump with Pyramid

Here’s a demonstration of using Pyramid to run Python-BloodHound from python.exe after importing its dependencies in memory. Only the Cryptodome wheel has been dropped on disk, because it contains pyd files used by BloodHound.

In the following video Pyramid has also been used to dynamically in-memory import impacket-secretsdump.


Beacon Object File execution via shellcode

This technique was already introduced in my previous blogpost; the TL;DR is that we can use COFFloader and BOF2Shellcode to execute Beacon Object Files via shellcode. The shellcode can then be injected directly into python.exe using Python and ctypes.

We can dump lsass directly from python.exe using nanodump, but we need to modify it a bit for it to work with our technique. Since we’ll be executing a BOF without a Cobalt Strike Beacon running, we should get rid of all the internal Beacon API calls, otherwise the BOF will crash. We should also hardcode command line parameters to increase BOF execution stability, thus getting rid of the command line parsing functions. Finally, we can choose our preferred lsass dumping method and hardcode it too.

Bear in mind that with this technique no pyd files are dropped on disk.

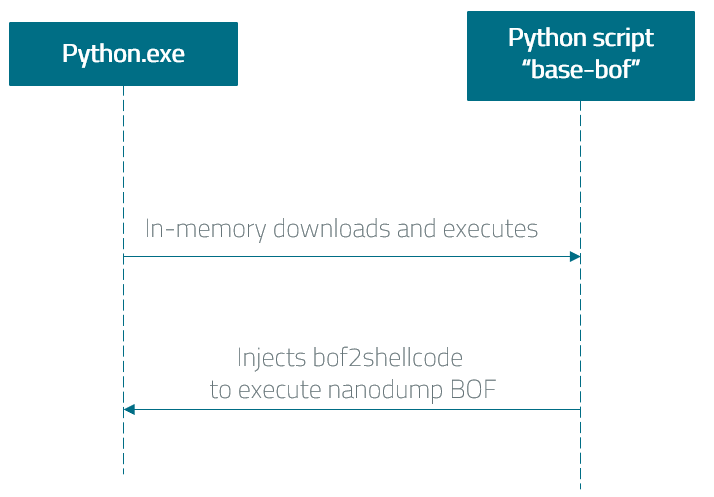

The technique execution flow is depicted in the following scheme:

Figure: Dumping LSASS with Pyramid and nanodump

In the following video, Pyramid has been executed to dump lsass on a machine equipped with a top-tier EDR (details have been blurred and I won’t name the EDR product) using the nanodump BOF and the process forking technique.

You can find the modified nanodump used for the demo here on my github

In-process C2 agent injection

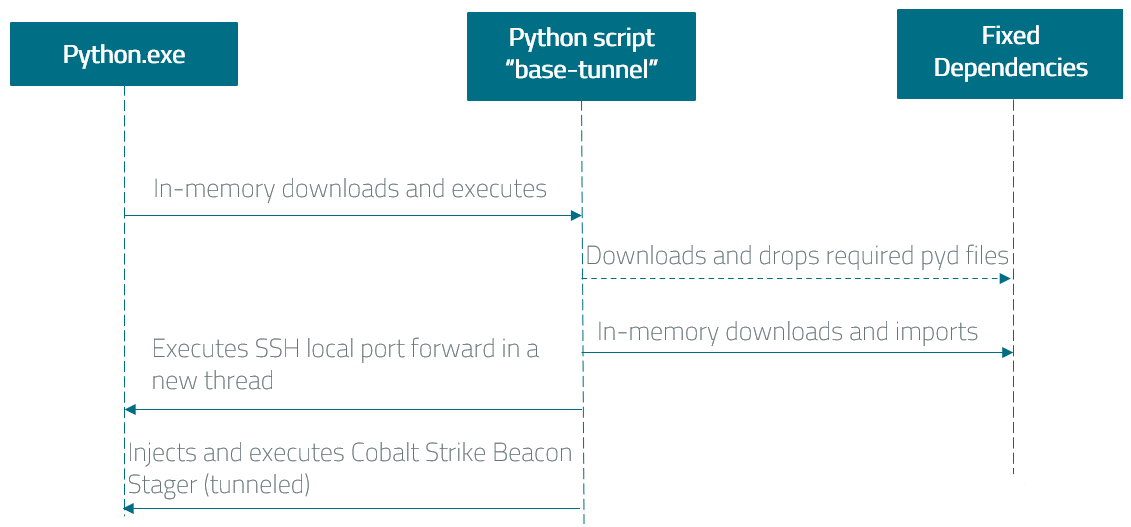

Executing a C2 agent increases the chances of detection by memory scans; however, certain scenarios might require agent execution for the operation to continue. For this reason, Pyramid provides the capability of executing a C2 agent stager and tunnelling its traffic through SSH, all within the python.exe process. This is achieved by first dynamically importing paramiko and then starting SSH local port forwarding to an attacker-controlled SSH server in a new local thread.

The C2 agent shellcode is then injected and executed in-process. The stager should be generated using the host 127.0.0.1 as C2 server with the same port opened locally by the SSH local port forward. The technique execution flow is depicted in the following scheme:

Figure: In-process tunneling a Cobalt Strike Beacon with Pyramid

In the following video, Pyramid has been executed to perform SSH local port forwarding and execute a Cobalt Strike Beacon stager tunneling its traffic over SSH. In this case too, the OS was equipped with a top-tier EDR.

Conclusions

It has been demonstrated that Python provides some key properties that effectively create blindspots for EDR detection, namely:

- Python’s wide usage creates a varied baseline telemetry for the Python interpreter, which calls native APIs directly. This can make it harder for EDR vendors to spot anomalies coming from python.exe or pythonw.exe.

- Python lacks transparency into dynamic code executed by python.exe or pythonw.exe.

- The Python Software Foundation officially provides a “Windows embeddable package” that can be used to run Python in a minimal environment without installation. The package comes with signed binaries.

These properties, coupled with operational capabilities such as BOF execution, dynamic import of modules and in-process shellcode injection, can help operate in EDRs’ blindspots. Pyramid has been developed to put together all the concepts presented in this post and to bring operational capabilities to the Python Windows embeddable package.

How to defend from this

One obvious way to defend against these techniques would be to flag Python interpreters as Potentially Unwanted Applications, forcing EDR customers to investigate alerts and approve or deny Python usage for specific users. However, I don’t think that will be feasible in every situation. Attackers could also bring their own interpreter and still use these techniques, but in doing so they would lose the interpreter’s digital signature, so the attack’s effectiveness would probably be reduced.

As an EDR vendor, I would also want to analyze python.exe and pythonw.exe behaviour without the bias introduced by their varied baseline telemetry. In this way, the Python binaries would be treated as if they were unknown, which is in fact true as far as their behaviour is concerned, because the API calls made by the interpreter depend on the Python code executed.
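As a complement, a defender could at least surface the execution method described in this post. The sketch below assumes the process image path and the names of the files next to it are already available from endpoint telemetry. It flags python.exe or pythonw.exe when the directory contains the pythonXY._pth file that the Windows embeddable package ships with, or when the interpreter sits in a user-writable location. The path fragments are a hypothetical policy, not a vendor rule. Note that \users\ also matches per-user Python installs under AppData, so the ._pth check is the stronger signal.

```python
from __future__ import annotations

import re
from pathlib import PureWindowsPath

INTERPRETERS = {"python.exe", "pythonw.exe"}
# The Windows embeddable package ships a pythonXY._pth file next to the interpreter.
PTH_FILE = re.compile(r"^python\d+\._pth$", re.IGNORECASE)
# Hypothetical policy: path fragments where an unpacked interpreter is unusual.
USER_WRITABLE = ("\\users\\", "\\temp\\", "\\downloads\\")


def assess(image_path: str, sibling_files: list[str]) -> list[str]:
    """Return reasons to review a process image (empty list = nothing flagged)."""
    path = PureWindowsPath(image_path)
    if path.name.lower() not in INTERPRETERS:
        return []
    reasons = []
    if any(PTH_FILE.match(name) for name in sibling_files):
        reasons.append("embeddable package layout (pythonXY._pth next to the interpreter)")
    folder = str(path.parent).lower() + "\\"
    if any(fragment in folder for fragment in USER_WRITABLE):
        reasons.append("interpreter runs from a user-writable location")
    return reasons


print(assess(r"C:\Users\bob\Downloads\py\pythonw.exe", ["python311._pth", "python311.zip"]))
print(assess(r"C:\Program Files\Python311\python.exe", ["python3.dll"]))  # []
```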